WEEK IX

Inputs & Outputs

This week, I worked on a series of machine learning experiments using the Arduino IDE and Google Teachable Machine.

PHASE 1

Gathering

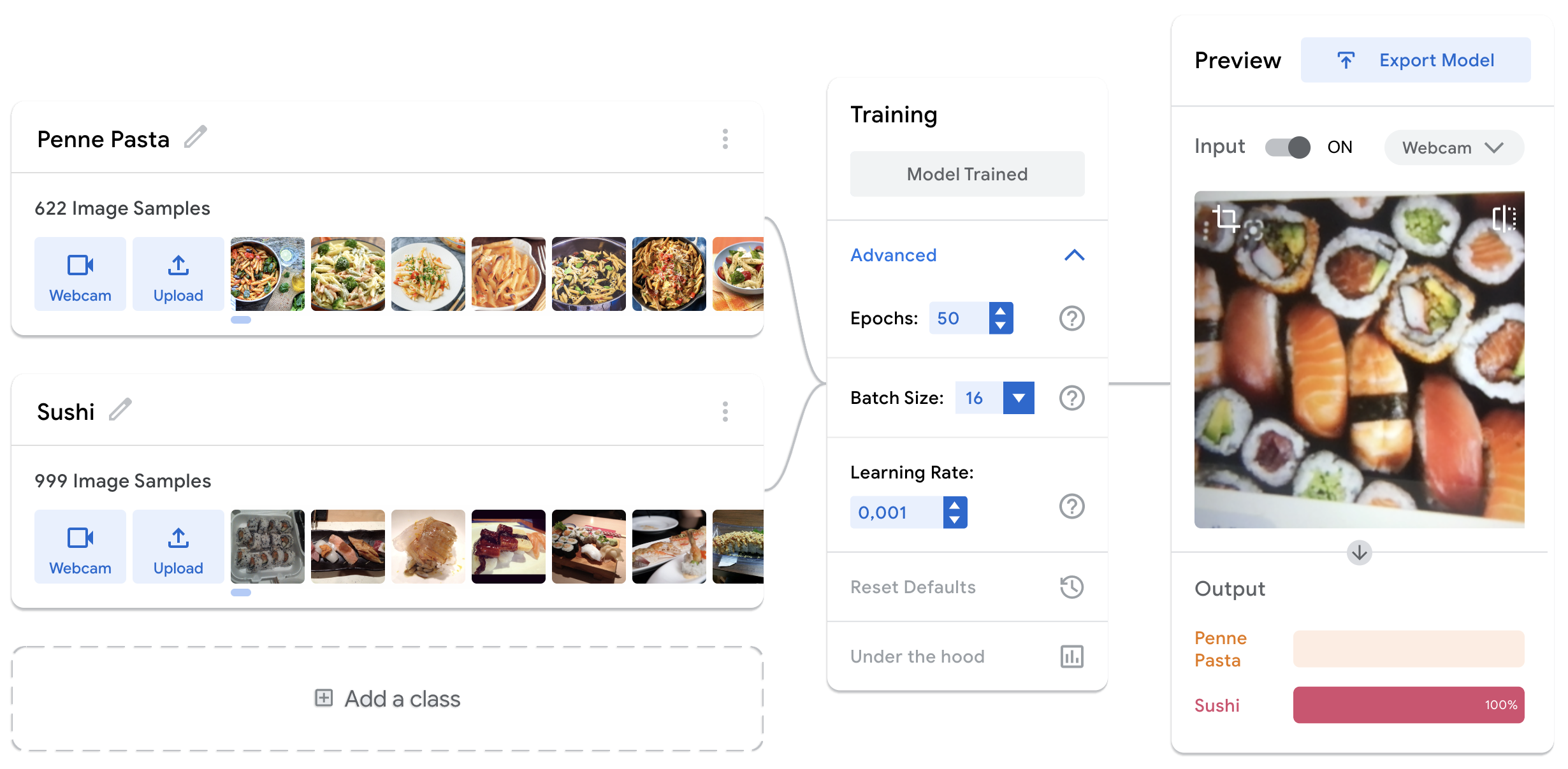

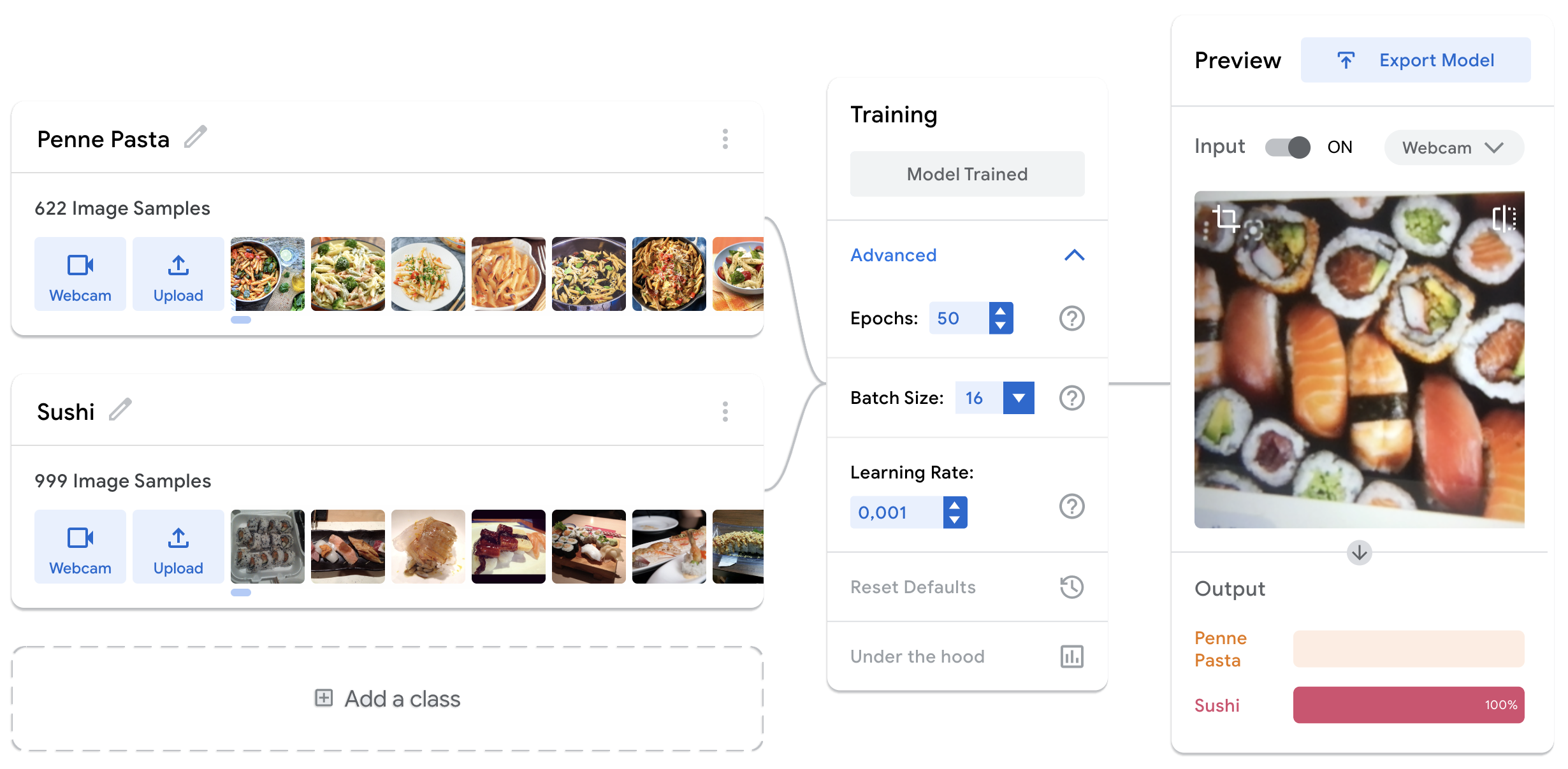

To create a legitimate data set, certain open libraries and data from Kaggle and Imaging & Vision were used. The images needed a lot of manual sorting and cleaning, which took at least 2 days. For the first iteration, the Image Model on Google Teachable Machine was used. The platform is undeniably great at demystifying the black box of machine learning and AI for people who are just getting started.

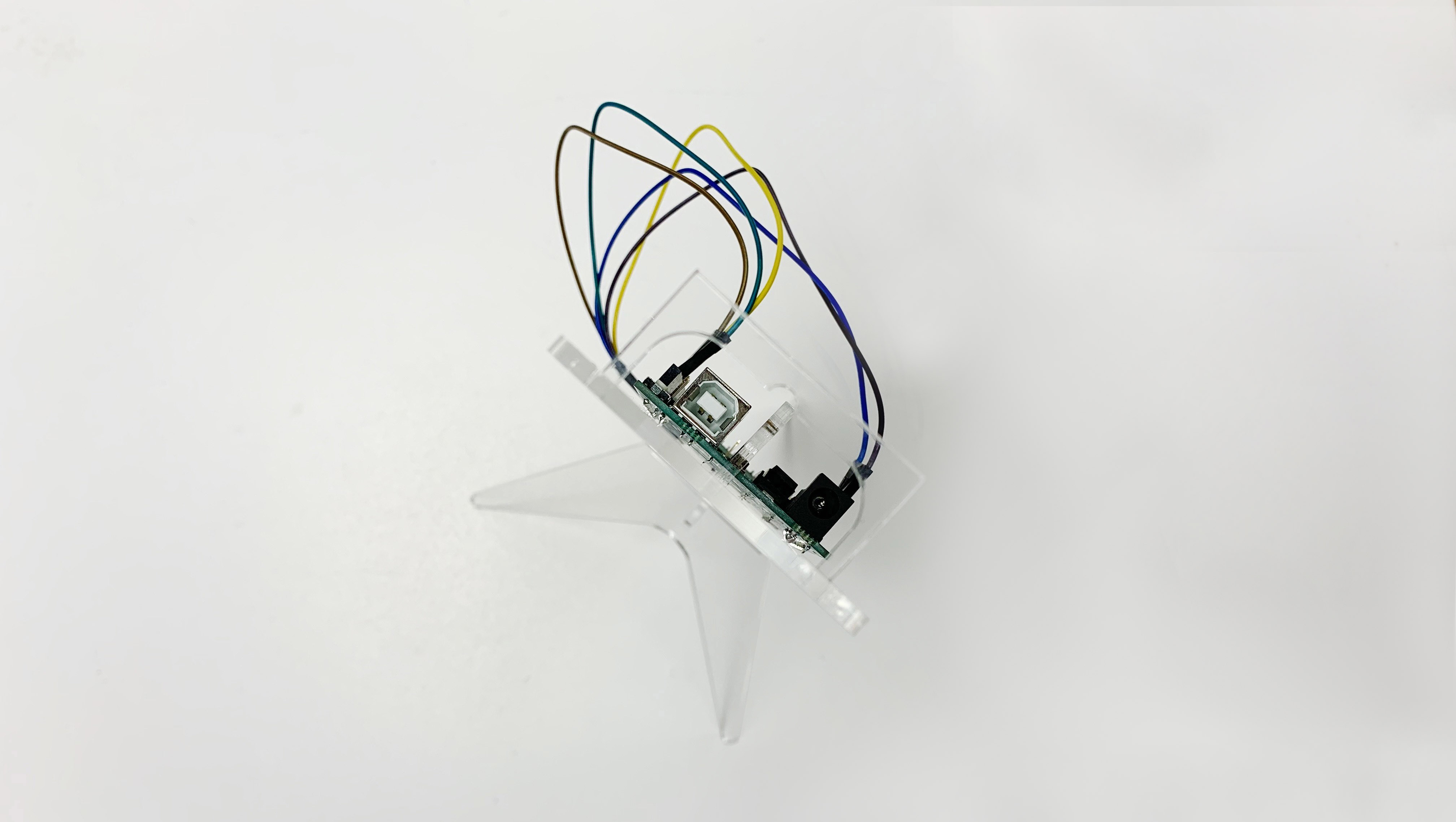

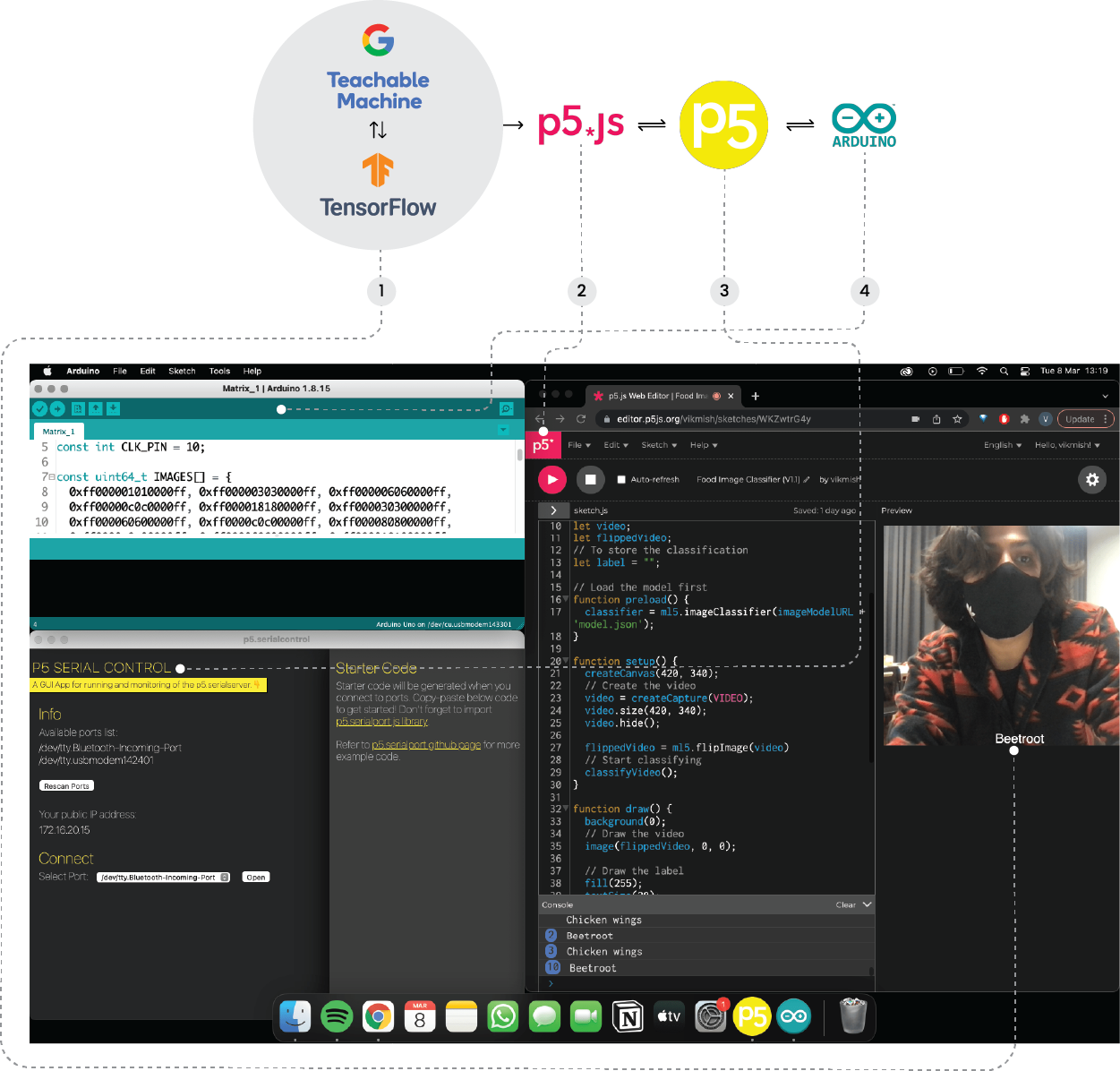

Fig. 9.1 The first-level iteration with two classes

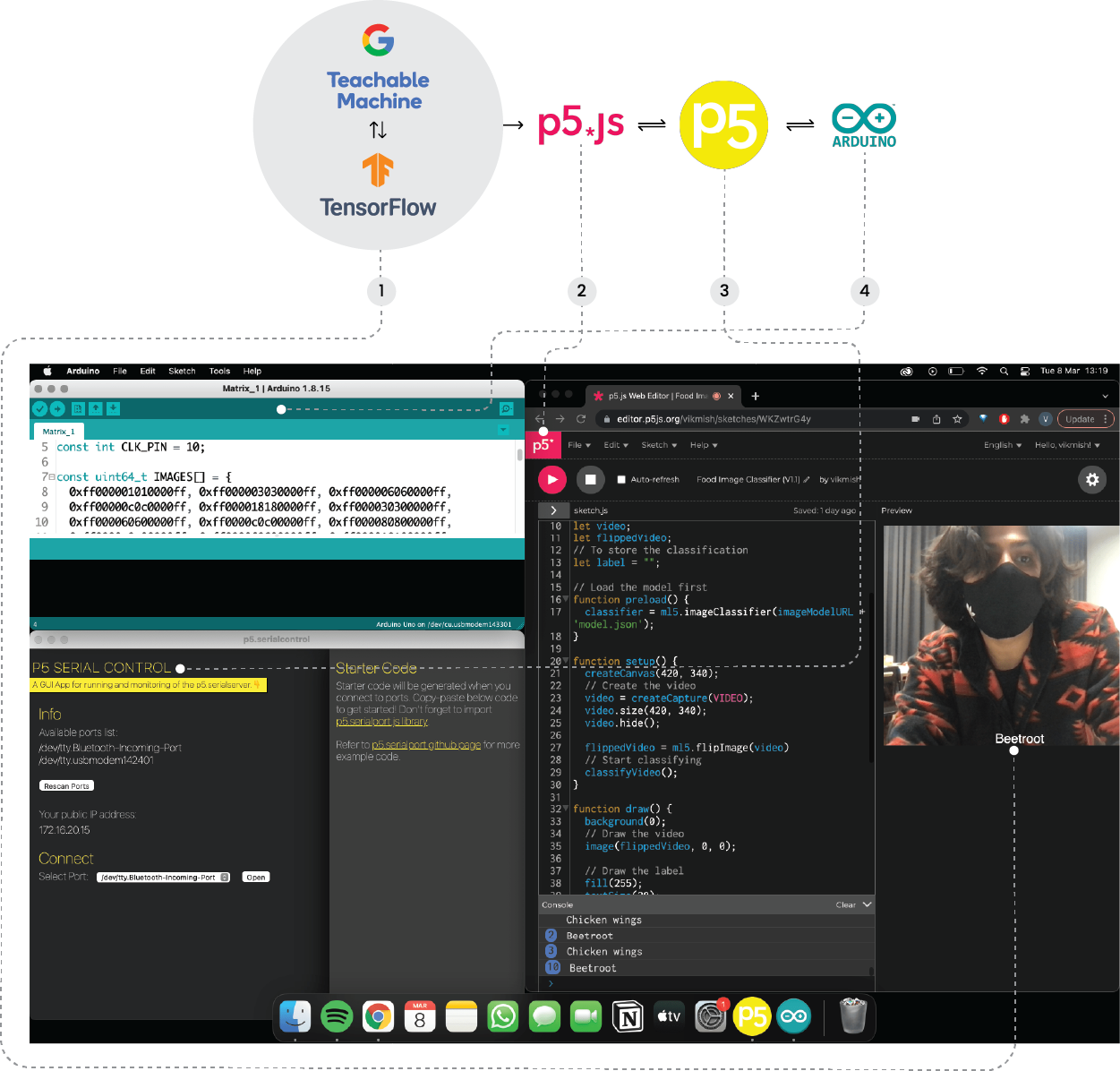

After a Teachable Machine model with 40 classes was created, it was exported as a TensorFlow model. It was then loaded into a p5.js sketch by The Coding Train, written in JavaScript. The sketch used the local webcam as input and tried to identify whatever it was shown. The results were displayed as text alongside the video output on the preview screen. Initial tests involved pointing random visuals at the camera to check the responses. For the first 5 tests, it showed the results accurately. The model reached 68% accuracy, which is apparently not the worst starting point in the world of machine learning and AI.
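
The step of turning the classifier output into a single on-screen label can be sketched roughly as follows. This is a minimal sketch, not the code from the original sketch: it assumes each webcam frame yields an array of objects with `label` and `confidence` fields, and the function name `topPrediction`, the threshold of 0.5 and the sample values are all made up for illustration.

```javascript
// Pick the most confident prediction, or null if nothing is confident enough.
// ASSUMPTION: `results` is an array of { label, confidence } objects,
// with confidence between 0 and 1.
function topPrediction(results, minConfidence = 0.5) {
  if (!Array.isArray(results) || results.length === 0) return null;
  // Do not rely on the array being pre-sorted; find the maximum explicitly.
  const best = results.reduce((a, b) => (b.confidence > a.confidence ? b : a));
  return best.confidence >= minConfidence ? best : null;
}

// Hypothetical results for one webcam frame.
const frame = [
  { label: "burger", confidence: 0.12 },
  { label: "gyoza", confidence: 0.81 },
  { label: "falafel", confidence: 0.07 },
];

const detected = topPrediction(frame);
console.log(
  detected
    ? `${detected.label} (${Math.round(detected.confidence * 100)}%)`
    : "unsure"
);
```

Returning null below the threshold gives the sketch a way to show "unsure" instead of always committing to one of the 40 classes.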

PHASE 2

Making Sense

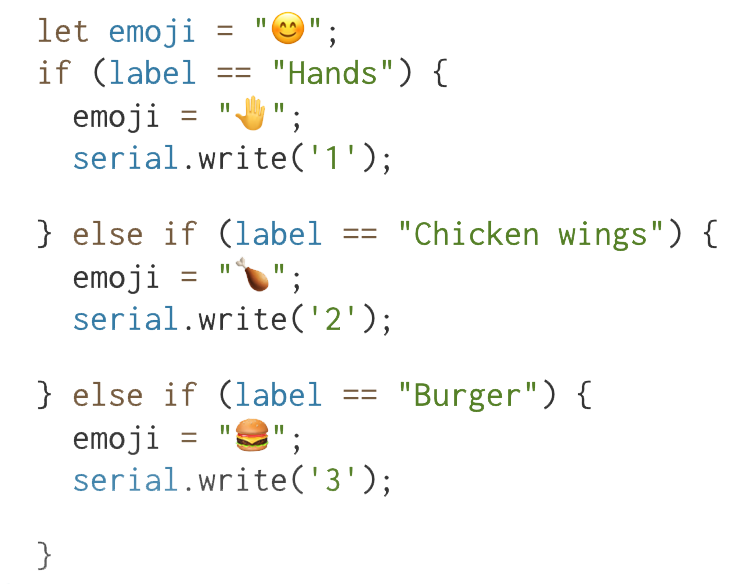

This bit was mostly about experimentation and having fun with the data. By declaring a variable in the p5.js sketch, such as let emoji, you can assign an emoji whenever a certain label is detected. As part of the experimentation, I tried assigning emojis to certain foods like burgers, chicken wings, etc. The results were funny and mildly problematic, because the model was detecting people with darker skin tones as chicken wings or falafel and people with lighter skin tones as gyozas or dumplings. Slightly racist, I must say. 😉
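
The emoji assignment can be sketched as a simple lookup table. This is only an illustration: the table `EMOJI_BY_LABEL`, the helper `emojiFor` and the label spellings are hypothetical, and the real labels would have to match the class names used in Teachable Machine.

```javascript
// Hypothetical label-to-emoji table; the keys must match the class names
// used when training the model (lowercased here).
const EMOJI_BY_LABEL = {
  burger: "🍔",
  "chicken wings": "🍗",
  falafel: "🧆",
  gyoza: "🥟",
};

// Return the emoji for a detected label, or a neutral fallback when the
// label has no entry in the table.
function emojiFor(label, fallback = "❓") {
  const key = String(label).trim().toLowerCase();
  return Object.prototype.hasOwnProperty.call(EMOJI_BY_LABEL, key)
    ? EMOJI_BY_LABEL[key]
    : fallback;
}

let emoji = emojiFor("Burger");
console.log(emoji);
```

The fallback only covers labels that are missing from the table. It does nothing about the misclassifications described above, which come from the model and not from the lookup.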

PHASE 3

Communication

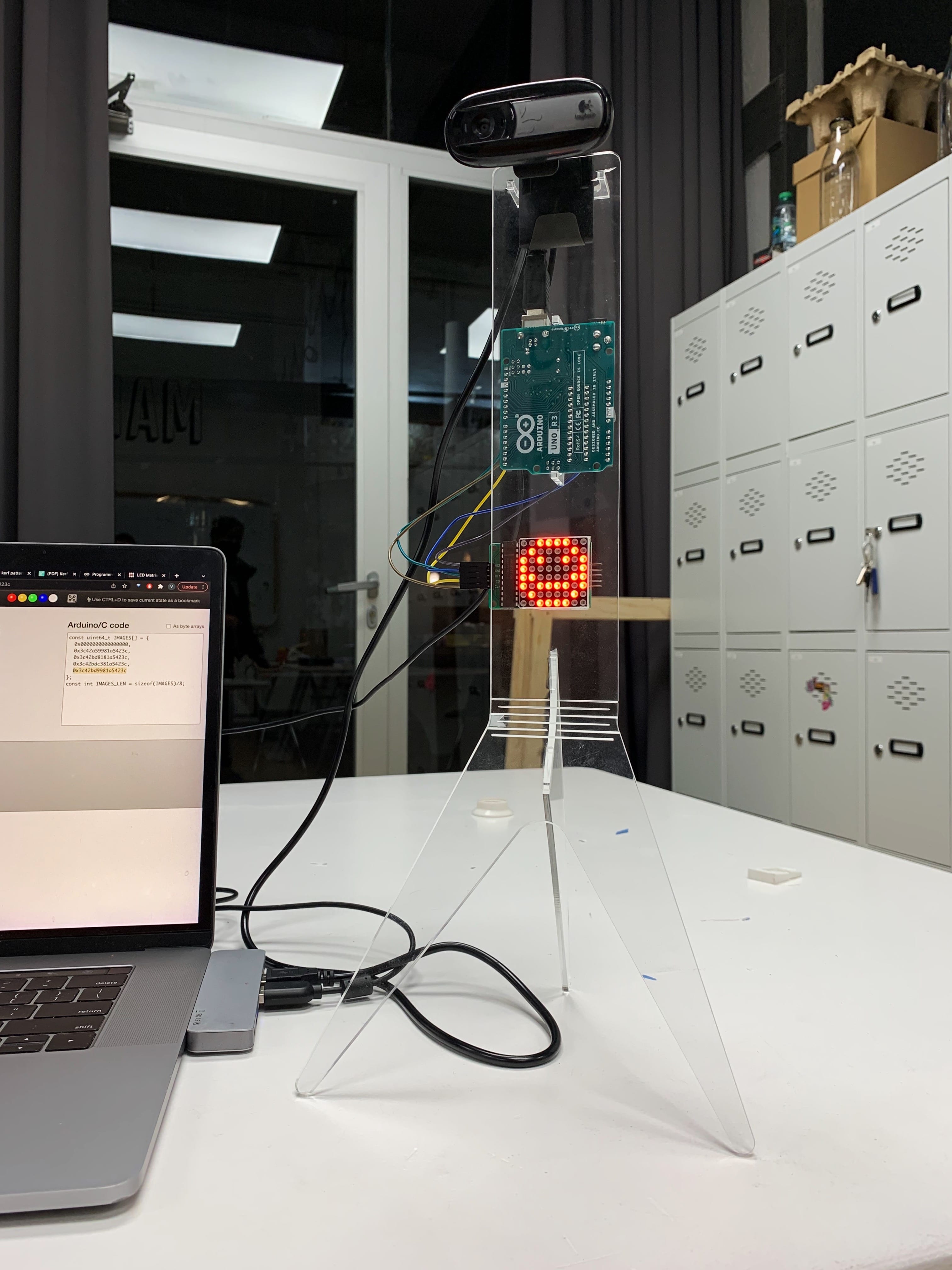

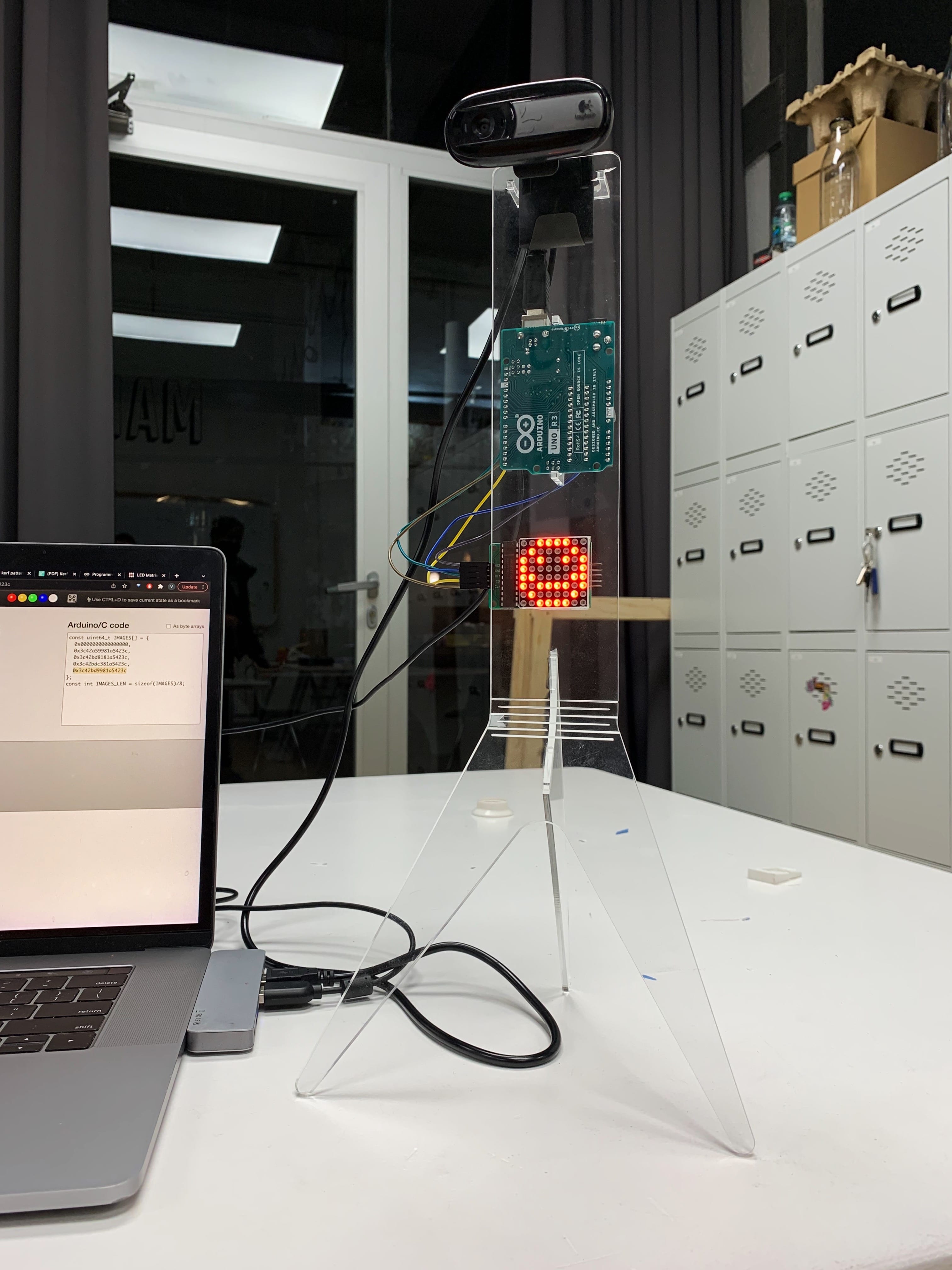

The process of gathering and making sense of the data (algorithmically) took over 2 weeks. The next phase involved figuring out the tools and agents that could carry the detected subject, variable or message; that choice is currently undecided. The aim was to keep the first message easy to understand and communicate, which led to exploring multiple visual stimuli like LEDs and LED matrices. But before even jumping into the electronics, a complex part to discover and understand was serial communication: the process of sending data one bit at a time, sequentially, over a communication channel or computer bus.
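
To make "one bit at a time" concrete, here is a small sketch of how a single byte could be laid out on the wire. It assumes the common 8N1 framing (one start bit, eight data bits sent least-significant bit first, no parity, one stop bit), which is an assumption about the setup and not something confirmed for this project. The function name `frameByte` is made up for illustration.

```javascript
// Turn one byte into the sequence of bits sent on the wire.
// ASSUMPTION: 8N1 framing, i.e. start bit (0), eight data bits with the
// least-significant bit first, no parity bit, stop bit (1).
function frameByte(byte) {
  if (!Number.isInteger(byte) || byte < 0 || byte > 255) {
    throw new RangeError("byte must be an integer from 0 to 255");
  }
  const bits = [0]; // start bit
  for (let i = 0; i < 8; i++) {
    bits.push((byte >> i) & 1); // data bits, least-significant first
  }
  bits.push(1); // stop bit
  return bits;
}

// The character "A" has the code 65 (binary 01000001).
console.log(frameByte("A".charCodeAt(0)).join("")); // prints 0100000101
```

In practice the serial hardware does this framing on its own; the sketch only shows what "sequentially" means for a single character.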

Serial communication is essential when multiple electronic devices or interfaces need to communicate with each other. In this case, to display a variable on an LED matrix, serial communication needs to happen between the origin, i.e. the p5.js sketch, and the Arduino microcontroller. This required a considerable amount of research, plus sourcing and installing the libraries that make the communication happen. The article by ITP NYU and another one on Medium were a good starting point.
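
The sending side of that link could look roughly like the sketch below. It is a sketch under assumptions, not the project's actual code: the helpers `encodeLabel` and `makeSender` are hypothetical, and `write` stands in for whichever serial library ends up doing the real sending. Each message is terminated with a newline so the receiver can tell where one label ends and the next begins.

```javascript
// Build a newline-terminated message so the receiver knows where it ends.
function encodeLabel(label) {
  // Replace line breaks inside the label so they cannot split the message.
  return String(label).replace(/[\r\n]+/g, " ").trim() + "\n";
}

// Send a label only when it changes, to avoid flooding the serial link
// with the same value on every video frame.
// ASSUMPTION: `write` is a hypothetical stand-in for a serial write call.
function makeSender(write) {
  let last = null;
  return function send(label) {
    if (label === last) return false; // nothing new to report
    last = label;
    write(encodeLabel(label));
    return true;
  };
}

// Usage with a fake port that just records what would be written.
const sent = [];
const send = makeSender((msg) => sent.push(msg));
send("burger");
send("burger"); // unchanged, so nothing is written
send("gyoza");
console.log(sent);
```

On the Arduino side, a line-based message like this could be read with something like Serial.readStringUntil('\n') before deciding what to show on the LED matrix.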

Fig. 9.2 The workflow across different platforms and the physical setup for demonstration (left to right)